This article was published in The Generator on Medium.com on May 14, 2023

And how does AI feel about you?

Words carry baggage. We feel this intuitively with words that name people, whether individuals or groups. Think of words like Democrat and Republican, or Trump and Biden, and the descriptors come to mind easily. They’ll vary, of course, depending on your political leanings. But given your predilections, the baggage will be obvious in its heft. For some words the weight will be heavy, for others light.

But in fact every word carries some of that emotional baggage and, surprisingly, people tend to converge on those hidden associations for words that aren’t politically or religiously loaded. This was the insight of Charles Osgood, who developed a statistical procedure called the Semantic Differential to chart people’s associations for everyday words. It’s a technique still relied on today, particularly by advertisers and marketing researchers, to pick words for their promotions that will appeal to a target group and avoid turning it off.

This technique is not a type of word association test, of the sort used in clinical psychology.

Tell me the first thing that comes to mind when I say “mother.”

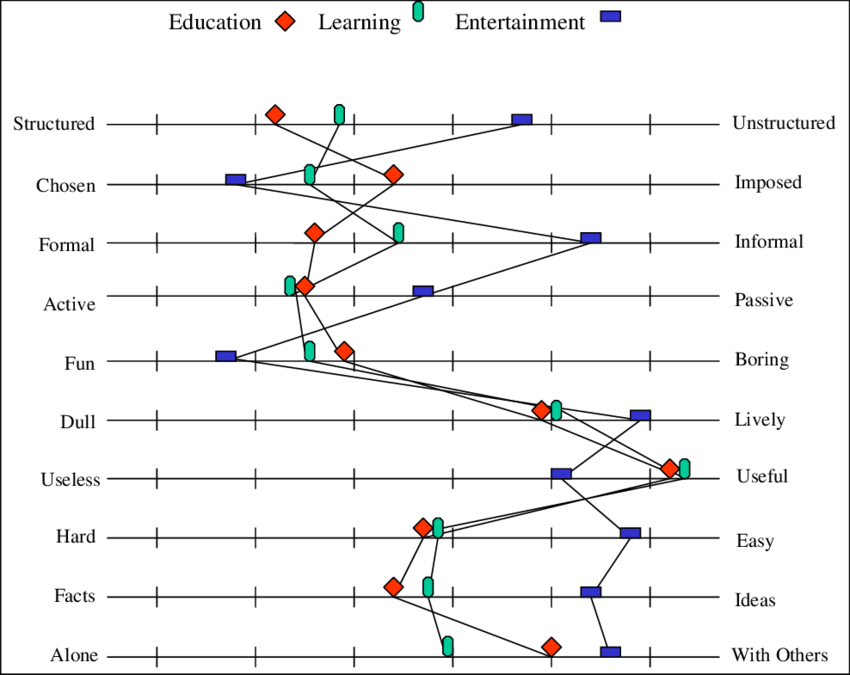

The semantic differential is based instead on marking where a content word best fits on each of a list of scales anchored by opposing adjectives. A scale can have five (or more) points. For example, where does a content word like “education” fall on a scale with poles at happy and sad? Does it fit best at Very happy, Sort of happy, Neither happy nor sad, Sort of sad, or Very sad? When you present 20 or more of these opposing pairs of descriptors and summarize your results across all respondents, you end up with a graphic profile for each content word. The head graphic for this article shows the result for three different words.
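To make the procedure concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the three adjective pairs, the 1-to-5 coding, and the ratings themselves are made up, and averaging is only one simple way to summarize responses across respondents.

```python
from statistics import mean

# Hypothetical scales, coded 1 (left pole) to 5 (right pole).
SCALES = [("sad", "happy"), ("bad", "good"), ("weak", "strong")]

# Made-up ratings: one list per respondent, one rating per scale, in SCALES order.
responses = {
    "education": [[4, 5, 4], [3, 4, 4], [4, 4, 5]],
    "computer": [[3, 4, 5], [2, 3, 4], [3, 3, 4]],
}


def profile(ratings):
    """Average each scale across respondents: one profile point per scale."""
    return [mean(column) for column in zip(*ratings)]


profiles = {word: profile(ratings) for word, ratings in responses.items()}

# Print each word's profile, one line per adjective pair.
for word, points in profiles.items():
    for (low, high), score in zip(SCALES, points):
        print(f"{word:10s} {low}-{high}: {score:.2f}")
```

Plot each word’s list of averages against the adjective pairs and you get the kind of profile line described above.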

When you profile a set of words, you see significant differences between concepts such as “AI,” “mother,” “computer,” “physician,” and so on. They won’t typically show the same patterns, but you do see significant agreement among respondents for each concept. In other words, the semantic differential is measuring something subjectively real about the underlying meanings we attach to words, even though you won’t find those meanings in the dictionary.

Osgood theorized that the opposing markers used in the semantic differential fall into three categories, or factors. These he called evaluative, potency, and activity. The prototypical evaluative adjective scale is good-bad. It’s the factor that seems to be most important in determining the prevailing or dominant attitude people have to a concept.

English has thousands of adjectives, and it’s in the nature of adjectives to exist in polar opposites. We learn and practice those opposites from kindergarten on and sharpen our understanding of them through experience, conversation, and reading. Eventually, as writers, we learn how to apply them reliably, matching them up intuitively to what we’re describing. To take a purposely oddball example, labeling someone as “pusillanimous” implies the person is not its opposite, that is, “plucky,” or some such word on the potency factor.

A semantic differential study conducted in 2017 by a group of IEEE researchers reported how respondents rated two types of robots: those we have at present (generalized robots) vs. those we would like to have (idealized robots). Thirty opposing adjective pairs were used.

Most of the attributes for both types of robots scored in the midrange, meaning that people didn’t much feel that those attributes mattered to their subjective impressions. There were only a handful of highest-rated attributes. These included friendly, honest, trustworthy, nice, reliable, competent, helpful, and rational. On these eight, which we could call the most desirable attributes for any robot to have, the idealized robot outscored the generalized robot on seven. That is, it conformed better to the positive member of the pair. In other words, people thought that the robots we had in 2017 or thereabouts still had room to grow or improve to reach an ideal of “robotness.”

The only attribute on which the generalized robot scored better was rational. In other words, however much friendlier, nicer, more helpful, and more competent an idealized robot might be, its theoretical “owner” thinks it would also be better if it were more emotional, that being the polar opposite of rational on the scale. A robot can’t be emotional at all, of course, so what this rating implies is that the robot should in some unstated, conjectured way “behave” or perform differently than it does now. The trick is how to specify that difference in performance we would like to see. That’s the hard part, adjusting the algorithms to get what we want.

On five of these high-value scales, the hypothetical idealized robot scored statistically significantly better than the generalized robot. It was considered better on friendly, honest, trustworthy, nice, and competent. But on the rational to emotional scale, the statistical significance worked in favor of the generalized robot.
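What does “significantly better” look like in practice? The study’s actual test isn’t described here, so the sketch below is only one plausible approach: a paired t statistic, assuming each respondent rated both robots on the same scale. The ratings are made up, and the helper name `paired_t` is my own.

```python
from math import sqrt
from statistics import mean, stdev

# Made-up ratings on a hypothetical unfriendly (1) to friendly (5) scale,
# one entry per respondent, same respondent order in both lists.
generalized = [3, 3, 4, 2, 3, 3, 4, 3]
idealized = [5, 4, 5, 4, 4, 5, 5, 4]


def paired_t(first, second):
    """Paired t statistic: mean difference divided by its standard error."""
    diffs = [b - a for a, b in zip(first, second)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))


t = paired_t(generalized, idealized)

# With 8 respondents (7 degrees of freedom), the two-tailed 5% critical
# value of the t distribution is about 2.365.
CRITICAL_T = 2.365
print(f"t = {t:.2f}, significant at 5%: {abs(t) > CRITICAL_T}")
```

A positive t beyond the critical value favors the idealized robot on that scale; a negative one, as on the rational to emotional scale, would favor the generalized robot.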

Should we infer from this particular measure that people would prefer their own ideal robot to reveal more of its emotional side, rather than its coldly analytic rational side? Does it mean that we’d prefer our robots to tell us little white lies because we humans want our opinions to be supported, not challenged? Or does it mean that the robot should respond sensitively, with appropriate deference to how it perceives our emotional state at the time we engaged it?

The main take-away from the study would seem to be that the people who took part in it in 2017 believed robots had some way to go to improve, to make themselves more appealing. The study, of course, predates the rise of the AI chat bots currently poised to dominate our next economic cycle and possibly hasten our apocalypse, if we’re to believe the doomsayers.

I wonder, if the semantic differential test were given today, how the results would change. Would the kinds of robots people visualize today align with the kinds of idealized robots they had in mind in 2017? Have the two graphs shifted to align with each other? Has the idealized robot of 2017 been achieved? Or would we now be seeing yet another split where we idealize an even better robot than our current AI bots?

Would the dire prospects, so often reported, of bots taking our jobs and making a mockery of the years of education and training behind our doctorates and certificates have shifted our judgments on some measures?

Some of the squishier adjective scales, which didn’t rank among the most important distinctions, include soft to wild, gentle to aggressive, and happy to sad. These also showed a preference toward the positive member of each pair for the idealized robots. I wonder if today’s AI bots, given the misgivings being expressed about them, would be shifted in people’s ratings more toward the negative member. Would we maybe judge today’s bots as more wild, aggressive, and sad?

Likewise on the potency factor, we might prefer AI bots to come across as less determining and more reserved, less reckless and more helpful. And on the activity factor, maybe less active and more passive, less aggressive and more gentle.

These hidden characteristics of the AI bots of today and those being conceived and developed for the next evolving generation are not directly susceptible to programming instruction and are opaque to training exercises. How exactly does the human driving the AI bot’s algorithms make it known to the bot how the human world regards it? How do we instruct it to be more gentle and less reckless?

What adjectives mean is socially constructed; that is, not directly susceptible to perception. You know it when you feel it in your brain. By implication, then, affixing a particular label to an act or generalizing that label to a person comes about through the human perceptual apparatus delivering data to our brains, where the data is processed and filtered and sent to our language center to receive some adjective.

That was mean what you just did. I saw you do it, and you’re a mean person.

These sorts of interpretations and judgment calls are mediated by our bodies, our fight or flight responses, our hormone levels, our pains and smiles, our bodily memory, none of which an AI bot has. What’s it like to have a brain without a body?

I can order you, if you’re a human, to be nicer, and you have a decent if not perfect idea of what you have to do. But how do I tell that to a bot? How do I feed it the results of a semantic differential on how people perceive it or how they’d like it to change and make that intelligible to it? Doing that lies in the domain of consciousness and sentience, human properties that we still don’t have a firm grasp of ourselves.

It’s good to be human and have these “brain and body tools” at our disposal. They serve us by facilitating how we interact with our human and natural environments. Ultimately they’re responsible for us being able to leave the world better than how we found it. They can also screw things up greatly, of course. But without our bots possessing these tools, how do we know for sure they’re acting for good or evil purposes? After all, these are adjectives that the bots don’t have any inherent ability to understand.

Science fiction speculates repeatedly that we will endow bots with the capacity to make the world a better place: better for themselves, that is, but worse for us. Remember HAL saying, “I’m sorry, Dave. I’m afraid I can’t do that.”

We’re facing a major conundrum and we’re somewhere between embracing and ignoring it in our haste to get a positive return on our AI investment. Our strategy is to hope our uncertainties eventually will resolve themselves happily. We’re keeping our fingers crossed.

Or maybe we’re hoping that the bots will become intelligent enough to resolve these complex issues for us. Damn the torpedoes, full steam ahead into the Matrix. But hoping our way out of the uncertainty, like what we do with our thoughts and prayers, is not something a bot can do. It doesn’t usually work for us either.

I wonder how a bunch of intelligent bots responding to a semantic differential of the word “human” would rate us on the cautious to reckless scale.
